The SkyWatcher Star Adventurer mount is a good quality equatorial tracking mount for DSLR-based astrophotography. It is reasonably portable, so combined with a sturdy tripod, it is well suited for travel when weight and space are at a premium. It is not restricted to night time usage either: it provides an option for tracking the movement of the Sun, making it suitable for general solar imaging and eclipse chasing too.

The key to getting good results from any tracking mount is to take care when doing the initial setup and alignment. The Star Adventurer comes with an illuminated polar scope to make this process easier. The simple way to use this is to rotate it so that the clock positions (3, 6, 9, 12) have their normal orientation, and then use a smart phone application to determine where Polaris should appear on the clock face. The alternative way is to use the date / time graduation circles to calculate the positioning from the date and time. Learning this process is helpful if your phone batteries die, or you simply want to avoid bright screens at night time.

The explanation of how to use the graduation circles in the manual is not as clear as it should be though, so this post attempts to walk through the process with some pictures along the way.

Observing location properties

The first thing to determine is the longitude & latitude of the observing location, by typing “coordinates <your town name>” into Google. In the case of Minneapolis it replies with

44.9778° N, 93.2650° W

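The latitude is used later to set the altitude of the wedge. The longitude needs to be compared against the meridian of the local timezone. Minneapolis is in the US Central timezone, whose standard time is 6 hours behind UTC, which puts the timezone meridian at 90° W. The offset between the observing location and the timezone meridian is therefore

93.2650° W - 90° W = 3.2650° W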
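The subtraction above generalises to any location. The following is a minimal sketch, assuming longitudes are given as signed degrees (east positive, west negative) and that the timezone meridian lies at 15° per hour of standard UTC offset. The function names are made up for illustration.

```python
def timezone_meridian_offset(longitude_deg_east: float, utc_offset_hours: float) -> float:
    """Observer longitude minus timezone meridian, in degrees (east positive)."""
    # Assumption: the timezone meridian sits at 15 degrees per hour of
    # standard (non daylight saving) UTC offset.
    timezone_meridian = 15.0 * utc_offset_hours
    return longitude_deg_east - timezone_meridian


def describe_offset(offset_deg: float) -> str:
    """Format a signed offset the way the time meridian scale is read."""
    direction = "W" if offset_deg < 0 else "E"
    return f"{abs(offset_deg):.4f}° {direction}"


# Minneapolis: 93.2650° W, Central Standard Time is UTC-6
minneapolis_offset = timezone_meridian_offset(-93.2650, -6)
print(describe_offset(minneapolis_offset))
```

For Minneapolis this prints `3.2650° W`, matching the figure worked out by hand. Always use the standard UTC offset here, not the daylight saving one.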

Rough tripod alignment & mount assembly

Even though the Star Adventurer is a small portable mount, the combination of the mount, one or more cameras, and lenses / short tube telescopes will have considerable weight. With this in mind, don’t try to get away with a light or compact tripod. Use the strongest and heaviest tripod that you have available to support it well. When travelling, a trade off may have to be made to cope with luggage restrictions, which in turn can limit the length of exposures you can acquire and/or make the setup more susceptible to wind and vibrations. To increase the rigidity of any tripod, avoid fully extending the legs and keep them widely spaced. If the tripod has bracing between the legs use that, and if possible hang a heavy object beneath the tripod to damp any vibrations.

With the tripod erected, the first step is to attach the equatorial wedge. The tripod should be oriented so that the main latitude adjustment knob on the wedge is pointing approximately north. Either locate Polaris in the sky, or use a cheap hand held compass, or even a GPS app on a smart phone to determine north.

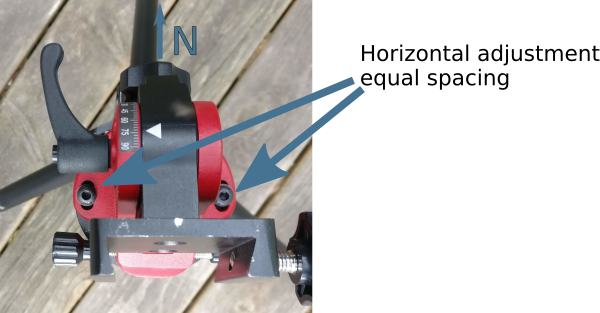

At this time also make sure that the two horizontal adjustment knobs are set to leave an equal amount of slack available in both directions. This will be needed when we come to fine tune the polar alignment later.

Now adjust the tripod legs to make the base of the wedge level, using the built-in omnidirectional spirit level to gauge it.

The final part of the approximate alignment process is to use the altitude adjustment knob on the wedge to set the angle to match the latitude of the current observing location. As noted earlier the latitude of Minneapolis is 44.9778° N, so the altitude should be set to 45°. Each major tick on the altitude scale covers 15°, and is subdivided into 5 minor ticks each covering 3°.
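Since the scale only resolves 3° steps, it can help to work out in advance which tick to aim for. This is a small sketch of that arithmetic, assuming the scale counts up from 0°. The function name is hypothetical.

```python
def altitude_scale_ticks(latitude_deg: float) -> tuple[int, int, float]:
    """Return (major ticks, extra minor ticks, snapped angle in degrees).

    Major ticks are 15 degrees apart and each is split into 5 minor
    ticks of 3 degrees, as described above. Assumes the scale starts at 0.
    """
    minor_total = round(abs(latitude_deg) / 3.0)  # nearest 3 degree step
    major, minor = divmod(minor_total, 5)         # 5 minor ticks per major tick
    return major, minor, minor_total * 3.0


major, minor, snapped = altitude_scale_ticks(44.9778)
print(f"{major} major ticks + {minor} minor ticks = {snapped}°")
```

For Minneapolis this lands exactly on the third major tick (45°). Latitudes that fall between minor ticks have to be estimated by eye, which is fine because this step is only the rough alignment.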

At this point the main axis of the mount should be pointing near to the celestial north pole, but this is not anywhere near good enough to avoid star trailing. The next step will be to do the fine polar alignment.

Checking polar scope pattern calibration

For a mount that has not yet been used, it is advisable to check the calibration of the polar scope pattern, as it may not be correct upon delivery, especially if the unit has been used for demo purposes by the vendor or was a previous customer return. Once calibrated, it should stay correct for the lifetime of the product, so this won’t need repeating every time. Skip to the next heading if you know the pattern is correctly oriented already.

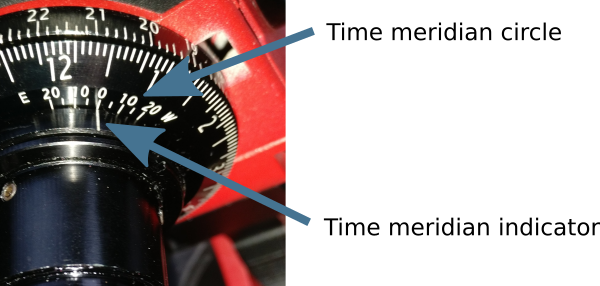

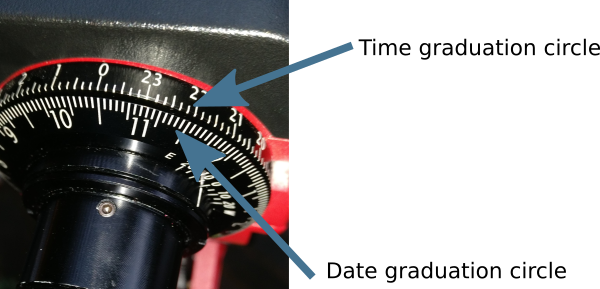

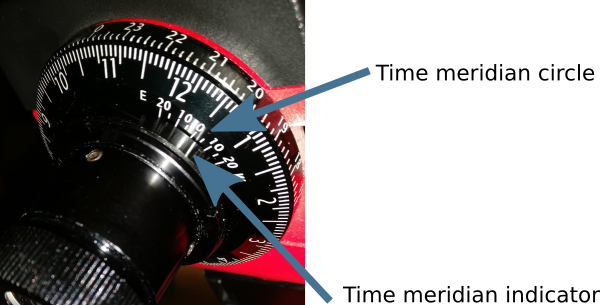

The rear of the main body has two graduated and numbered circles tracking time and date. The outer circle is fixed against the body and marked with numbers 0-23. Each of the large graduation marks represents 1 hour, while the small graduation marks represent 10 minutes each. The inner circle rotates freely and is marked with numbers 1 through 12. Each of the large graduation marks represents 1 month, while the small graduation marks represent approximately 2 days each. The inner circle has a second scale marked on it, with numbers 20, 10, 0, 10, 20 representing the time meridian offset in degrees. The eyepiece has a single white line painted on it which is the time meridian indicator.

To check calibration the inner circle needs to be rotated so that the time meridian circle zero position aligns with the time meridian indicator on the eyepiece.

The zero position on the time meridian circle is aligned with the time meridian indicator mark on the eyepiece.

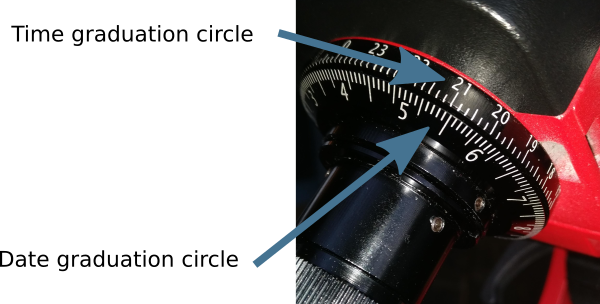

Now, while being careful not to move the inner circle again, the mount axis / eyepiece needs to be rotated so that the zero mark on the outer time graduation circle aligns with the Oct 31st mark on the date graduation circle (the big graduation between the 10 and 11 numbers).

While not moving the inner circle, the mount axis / eyepiece is rotated so that the number zero on the time graduation circle lines up with the large graduation between the 10 and 11 marks on the date graduation circle.

These two movements have set the mount to the date and time where Polaris will be due south of the north pole. Thus when looking through the eyepiece, the polar alignment pattern should appear with normal orientation, 6 at the bottom, 9 to the left, 3 to the right and 0 at the top. If this is not the case, then a tiny allen key needs to be used to loosen the screws holding the pattern, which can then be rotated to the correct orientation.

As mentioned above this process only needs to be done once when first acquiring the mount. Perhaps check it every 6-12 months, but it is very unlikely to have moved unless the screws holding the pattern were not tightened correctly.

Polar alignment procedure

After getting the tripod set up with the wedge attached and main body mounted, the process of polar alignment can almost begin. First it is recommended to attach the mount assembly dovetail bar and any cameras to the mount body. It is possible to attach these after polar alignment, but there is a risk of moving the mount, which can ruin the alignment. The only caveat with doing this is that with many versions of the mount it is impossible to attach the LED polar illuminator once the dovetail is attached. Current generations of the product ship a shim to solve this problem, while for older generations an equivalent adapter can be created with a 3D printer and can often be found pre-printed on eBay.

Earlier the difference in longitude between the timezone meridian and the current observing location was determined to be 3.2650° W. The inner graduated disc on the mount needs to be rotated so that the time meridian indicator on the eyepiece points to the time meridian circle position corresponding to 3.2650° W.

The time meridian indicator is aligned with the time meridian circle position corresponding to 3 W, which is the offset between the current observing location and the timezone meridian.

Now, without moving the inner dial, the main mount axis / eyepiece needs to be rotated to align the time graduation circle with the date graduation circle to match the current date and time. It is important to use the time without daylight saving applied. For example if observing on May 28th at 10pm while daylight saving is in effect, the time graduation circle marking for 21 needs to be used, not 22. May is the 5th month, and with each small graduation corresponding to 2 days, the date graduation circle needs to be aligned for the graduation just before the big marker indicating June 1st.

The time graduation circle marking for 21 is aligned with the date graduation circle marking for May 28th.

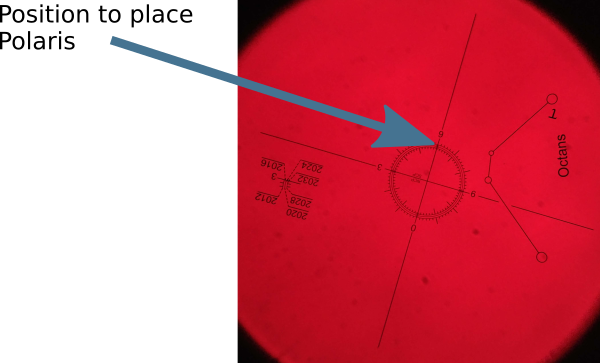
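The dial settings for the May 28th example can be worked out ahead of time. This is a rough sketch, assuming daylight saving shifts the clock by exactly one hour and that the caller states whether it is in effect. The function name, the returned keys and the year 2023 are made up for illustration.

```python
from calendar import monthrange
from datetime import datetime, timedelta


def dial_settings(local_time: datetime, dst_in_effect: bool) -> dict:
    """Convert wall-clock time into values to dial in on the two circles."""
    # Assumption: daylight saving is a one hour shift; remove it if present.
    standard = local_time - timedelta(hours=1) if dst_in_effect else local_time
    # The time circle has 10 minute graduations, so round to the nearest one.
    standard += timedelta(minutes=5)
    standard = standard.replace(
        minute=standard.minute - standard.minute % 10, second=0, microsecond=0
    )
    # Fraction of the way from this month's big graduation to the next one.
    days_in_month = monthrange(standard.year, standard.month)[1]
    return {
        "hour": standard.hour,
        "minute": standard.minute,
        "month": standard.month,
        "day": standard.day,
        "month_fraction": (standard.day - 1) / days_in_month,
    }


# 10pm on May 28th with daylight saving in effect (year chosen arbitrarily)
settings = dial_settings(datetime(2023, 5, 28, 22, 0), dst_in_effect=True)
print(settings)
```

For this example the hour comes out as 21, and the date sits most of the way through the May segment of the date circle, close to the June 1st big graduation.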

The effect of these two movements is to rotate the polar scope pattern so that the 6 o’clock position is pointing to where Polaris is supposed to lie. Hopefully Polaris is visible through the polar scope at this point, but it is very unlikely to be at the right position. The task is now to use the latitude adjustment knob and two horizontal adjustment knobs to fine tune the mount until Polaris is exactly at the 6 o’clock position on the pattern.

View of pattern through polar scope when set for 10pm on May 31st in Minneapolis, which is almost completely upside down. Polaris should be placed at the 6 o’clock position on the pattern.
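As a sanity check on the dials, the rotation they produce can be approximated numerically. The sketch below is not the manufacturer's method. It assumes the calibration reference described earlier (pattern upright at 00:00 standard time on Oct 31st with zero meridian offset), that the sky turns about 360.9856° per solar day, and that Polaris moves counterclockwise as seen through the polar scope. It ignores precession and other small effects, and all names are hypothetical.

```python
from datetime import datetime

# Degrees the sky turns per mean solar day (one extra turn per year).
SIDEREAL_DEG_PER_DAY = 360.0 * (1 + 1 / 365.2422)


def pattern_rotation_deg(standard_time: datetime, offset_east_deg: float) -> float:
    """Approximate rotation of the pattern away from upright, in degrees.

    standard_time is local time without daylight saving; offset_east_deg
    is the timezone meridian offset (west is negative).
    """
    # Most recent Oct 31st at 00:00, the assumed calibration reference.
    on_or_after_ref = (standard_time.month, standard_time.day) >= (10, 31)
    ref_year = standard_time.year if on_or_after_ref else standard_time.year - 1
    reference = datetime(ref_year, 10, 31)
    elapsed_days = (standard_time - reference).total_seconds() / 86400.0
    return (elapsed_days * SIDEREAL_DEG_PER_DAY + offset_east_deg) % 360.0


def clock_position(rotation_deg: float) -> float:
    """Where the pattern's 6 o'clock mark ends up on an upright clock face."""
    # Assumption: rotation is counterclockwise, starting from 6 o'clock.
    return ((180.0 - rotation_deg) % 360.0) / 30.0


rotation = pattern_rotation_deg(datetime(2023, 5, 28, 21, 0), -3.2650)
print(f"rotation {rotation:.1f}°, clock position {clock_position(rotation):.1f}")
```

For the May 28th example the rotation comes out not far short of 180°, which agrees with the pattern appearing almost completely upside down. Treat this only as a rough cross-check of the dial settings; the final placement of Polaris should still be done through the polar scope.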

Notice that the polar scope pattern has three concentric circles and off to the side of the pattern there are some year markings. Polaris gradually shifts from year to year, so check which of the concentric rings needs to be used for the current observing year.

The mount is now correctly aligned with the north celestial pole and should accurately track the rotation of the Earth to allow exposures several minutes long without stars trailing. All that remains is to turn the power dial to activate tracking. One nice aspect of equatorial mounts, compared to alt-az mounts, is that they can be turned off and on at will with no need to redo the alignment. When adding or removing equipment, however, it is advisable to recheck the polar scope to ensure the mount hasn’t shifted its pointing.